Good morning, The AI Night readers. Stanford stacked silicon into skyscrapers. Liquid AI made LLMs run twice as fast on a phone CPU. And the industry just burned through $61 billion on data centers that might already be overkill. Welcome to the efficiency pivot.

In today’s The AI Night:

Stanford unveils 3D chip that demolishes AI's memory bottleneck

Ex-Facebook privacy chief predicts AI efficiency pivot as data center deals hit $61B

Liquid AI drops “LFM2”, the fastest on-device foundation models on the market

Latest News

Stanford AI

Stanford unveils 3D chip that demolishes AI's memory bottleneck

Stanford researchers have created a new kind of 3D computer chip that stacks memory and computing elements vertically, dramatically speeding up how data moves inside the chip. Unlike traditional flat designs, this approach avoids the traffic jams that limit today’s AI hardware.

The details:

Outperforms comparable chips by several times in benchmark tests.

Addresses the "memory wall", where data transfer, not compute power, throttles AI performance.

Built entirely in a U.S. foundry, demonstrating production readiness.

Collaboration between Stanford, Carnegie Mellon, UPenn, and MIT.

Why it matters:

Current AI scaling is bottlenecked not by FLOPS but by bandwidth: how fast data moves between memory and processors. This vertical "Manhattan of computing" approach could reshape deployment economics by reducing reliance on massive GPU clusters. The U.S.-manufactured angle also addresses supply chain security concerns as infrastructure competition with China intensifies. Whether this translates to commercial production remains to be seen, but the architectural validation is significant.
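
For readers who want to see the "memory wall" in numbers, here is a minimal sketch using the standard roofline model, where achievable throughput is the smaller of peak compute and bandwidth times FLOPs per byte moved. The chip figures below are hypothetical round numbers chosen for illustration, not measurements of the Stanford chip or any real product.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float,
                     flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


# Hypothetical accelerator (illustrative values only).
PEAK_FLOPS = 100e12   # 100 TFLOP/s of raw compute
BANDWIDTH = 1e12      # 1 TB/s between memory and processors

# Assumed workload: about 1 FLOP per byte read, typical of the
# matrix-vector products that dominate token-by-token LLM generation.
INTENSITY = 1.0

base = attainable_flops(PEAK_FLOPS, BANDWIDTH, INTENSITY)
wider = attainable_flops(PEAK_FLOPS, 4 * BANDWIDTH, INTENSITY)

print(f"Baseline: {base / PEAK_FLOPS:.0%} of peak compute is usable")
print(f"With 4x bandwidth: {wider / base:.0f}x faster, same FLOPS")
```

Under these assumptions the chip idles at a small fraction of its peak, and adding bandwidth speeds things up while adding FLOPS would not. That is the case for moving memory closer to compute.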

AI

Ex-Facebook privacy chief predicts AI efficiency pivot as data center deals hit $61B

Source: CNBC

Former Facebook CPO Chris Kelly told CNBC that AI companies will pivot toward efficiency in 2026, citing unsustainable power demands amid a $61 billion data center construction frenzy.

The details:

OpenAI's infrastructure commitments exceed $1.4 trillion over the coming years.

A single 10-gigawatt data center partnership equals the power consumption of 8 million U.S. households.

DeepSeek launched a competitive open-source LLM for under $6 million, a fraction of U.S. competitors' costs.

Trump's approval of NVIDIA H200 chip sales to China could accelerate Chinese open-source efforts.

Why it matters:

Energy has become AI's binding constraint. Kelly's observation that human brains run on 20 watts while AI demands gigawatt facilities underscores the inefficiency gap. DeepSeek's low-cost model already proved efficiency gains are achievable. If Kelly's prediction holds, 2026 could favor architecturally efficient models over brute-force scale, reshaping competitive dynamics and potentially giving Chinese players an opening.
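
The power figures in this story can be sanity-checked with a few lines of arithmetic. This is a back-of-the-envelope sketch that assumes the facility draws its full 10 gigawatts around the clock. The variable names are ours, and the inputs are the numbers quoted above.

```python
# Figures quoted in the story.
DATA_CENTER_WATTS = 10e9   # 10 gigawatts
HOUSEHOLDS = 8e6           # 8 million U.S. households
BRAIN_WATTS = 20           # Kelly's figure for a human brain

HOURS_PER_YEAR = 8760      # 365 days x 24 hours

# Implied average continuous draw per household.
watts_per_household = DATA_CENTER_WATTS / HOUSEHOLDS

# The same figure expressed as annual consumption in kWh.
kwh_per_household_year = watts_per_household * HOURS_PER_YEAR / 1000

# How many 20-watt brains one such facility could power.
brain_equivalents = DATA_CENTER_WATTS / BRAIN_WATTS

print(f"{watts_per_household:,.0f} W per household")
print(f"{kwh_per_household_year:,.0f} kWh per household per year")
print(f"{brain_equivalents:,.0f} brain-equivalents")
```

The household comparison works out to a little under 11,000 kWh per home per year, which is in the range commonly cited for U.S. homes, so the 8 million figure is plausible. The brain comparison comes to half a billion brains per facility.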

Liquid AI

Liquid AI drops “LFM2”, the fastest on-device foundation models on the market

Source: Liquid AI

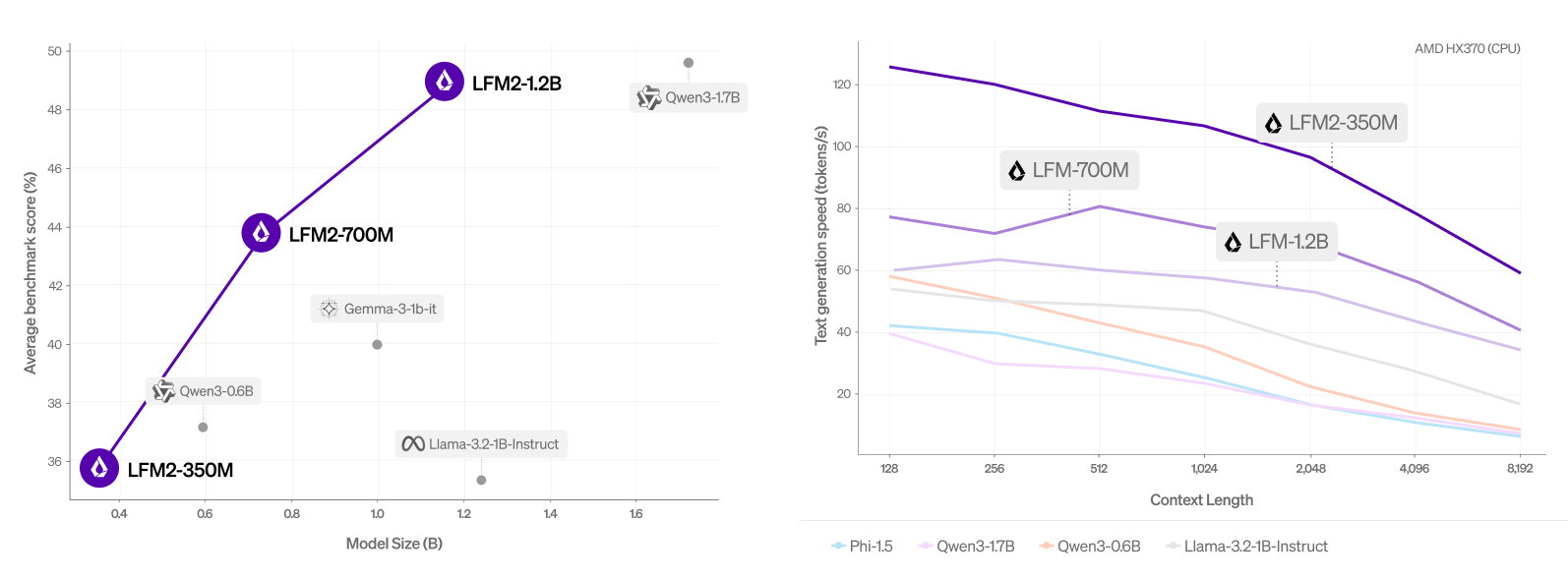

Liquid AI released a new class of Liquid Foundation Models (LFMs) that sets a new standard in quality, speed, and memory efficiency. LFM2 is specifically designed to provide the fastest on-device generative AI experience in the industry, opening up a massive number of devices to generative AI workloads.

The details:

Three model sizes: 350M, 700M, and 1.2B parameters.

New hybrid architecture: 10 gated convolution blocks + 6 grouped query attention blocks.

3x training efficiency improvement over the previous generation.

Open license (Apache 2.0-based), free for research and for commercial use under $10M in revenue.
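
One way to see why the block mix matters for memory: in a transformer, only attention blocks keep a key-value cache that grows with context length. The sketch below compares the 6 attention blocks listed above against a hypothetical 16-block all-attention stack. The head count, head size, context length, and 16-bit precision are illustrative assumptions, not LFM2's published configuration, and the small fixed-size state a convolution block keeps is ignored.

```python
def kv_cache_bytes(attn_layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of the key-value cache: one K and one V tensor per attention layer."""
    return 2 * attn_layers * kv_heads * head_dim * seq_len * bytes_per_value


# Block counts from the list above.
CONV_BLOCKS = 10
ATTN_BLOCKS = 6
TOTAL_BLOCKS = CONV_BLOCKS + ATTN_BLOCKS

# Hypothetical dimensions, chosen only for illustration.
KV_HEADS, HEAD_DIM, SEQ_LEN = 8, 64, 4096

hybrid = kv_cache_bytes(ATTN_BLOCKS, KV_HEADS, HEAD_DIM, SEQ_LEN)
all_attention = kv_cache_bytes(TOTAL_BLOCKS, KV_HEADS, HEAD_DIM, SEQ_LEN)

MIB = 1024 * 1024
print(f"Hybrid stack:        {hybrid / MIB:.0f} MiB")
print(f"All-attention stack: {all_attention / MIB:.0f} MiB")
print(f"Ratio:               {hybrid / all_attention:.3f}")
```

Whatever dimensions are plugged in, the ratio stays at 6/16, so under these assumptions the hybrid layout needs well under half the cache of an all-attention model of the same depth.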

Why it matters:

As the industry grapples with power constraints and latency demands, Liquid is betting that the future of AI runs locally. LFM2 targets phones, laptops, vehicles, and robotics: endpoints that need real-time reasoning without a cloud round trip.

That's a wrap for tonight.

Before you go, how did we do? Your feedback shapes tomorrow's edition.

See you soon